There is a future, it seems, where thoughts are no longer just fleeting images in our minds, but can become HQ videos. And it seems like a future that is increasingly closer to reality. A group of skilled researchers has just opened Pandora's box in the field of neuroscience. To help him, a good dose of AI.

The “projector” brain

Jiaxin Qing, Zijiao Chen e Juan Helen Zhou, from the National University of Singapore and the Chinese University of Hong Kong, presented some rather interesting research work. The team combined data obtained from functional magnetic resonance imaging (fMRI) with Stable Diffusion generative artificial intelligence to create MinD-Video, a model that can generate HQ videos directly from brain readings.

Science fiction stuff, you might say: but no, all rigorously documented on arXiv, e this is the link.

How exactly does MinD-Video work?

MinD-Video is not a simple video generator, but an entire system designed to make the decoding of images done by an AI and that done by a brain communicate. But how do you train such a system?

The researchers used a public dataset, containing videos and associated fMRI readings of subjects who watched them. And apparently the job worked out admirably.

See thoughts, we got there

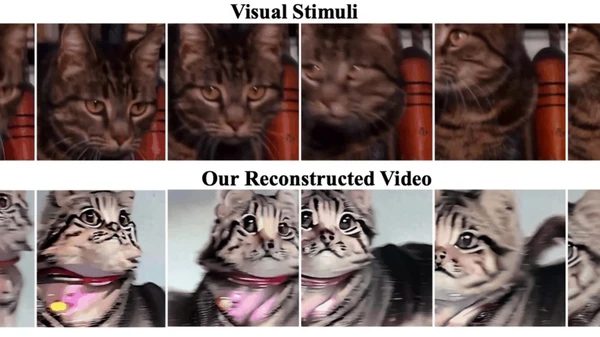

The videos published by scientists show truly fascinating results. Take for example an original video featuring horses in a field. MinD-Video “rebuilt” it by creating a more vibrant version of the horses. In another case, a car drives through a wooded area and the reconstructed video shows a first-person journey along a winding road.

According to the researchers, the reconstructed videos are of "high quality", with well-defined movements and scene dynamics. And the precision? 85%, a significant improvement over the previous attempts.

Mind reading and HQ video, what's next?

“The future is bright, and the potential applications are immense. From neuroscience to brain-computer interfaces, we believe that our work can have an important impact” declared the authors. And the findings don't stop there: their work highlighted the dominant role of the visual cortex in visual perception, and their model's ability to learn increasingly sophisticated information during training.

The Stable Diffusion model used in this new research makes the visualization more precise. “A key advantage of our model over other generative models, such as le GAN, is the ability to produce higher quality videos. It takes advantage of the representations learned from the fMRI encoder and uses its unique diffusion process to generate HQ videos that better align with the original neural activities,” the researchers explained.

In short, it seems that we have truly entered the era of mind reading through artificial intelligence. A field open to a thousand possibilities, where the limit seems to be only the imagination.