Science fiction has imagined, a million times over, a fully automated Earth where robots collaborate with humans in every possible way.

Sometimes we paint a robotic world where machines help us clean up the damage we have done (WALL-E). Sometimes a post-apocalyptic one, where an artificial intelligence sees humans as a plague to be eradicated (Terminator). And sometimes a world where humans and robots coexist peacefully (Bicentennial Man).

If I had to weigh the positions overall, I would say that most people dwell on the dangers more than the opportunities. When we imagine a robotic future 1,000 years from now, it looks more like an apocalypse than an earthly paradise.

Robotic future: why this pessimism?

We tend to compare robotics and AI research with other, similar technological advances that have often had appalling results. Work on atomic energy, for instance, began with bombs, continued with nuclear power plants (a mixed record there too) and has yet to show a truly bright side.

To gain everyone's trust, the robotic world must be designed from the ground up with precise rules on its relationship with humanity. The artificial intelligence of a robot must be able to recognize humans and avoid harming them at all costs. Do you know Asimov?

It is not easy, if you consider that as you read this article the USA, Russia, China and other countries are developing programs for drones and robots capable of killing people. Even autonomously.

That is the whole robotic problem

We are about to enter an important era, but we continue to ignore the advice of two generations of scientists: people who have been investigating and studying the problems of a robotic future for years. Geniuses, visionaries and pioneers of technology such as Kurzweil, Musk, Hawking, Harari, Gawdat, Asimov, Vinge, and many others have been warning us about the inherent dangers of AI and robotics for decades.

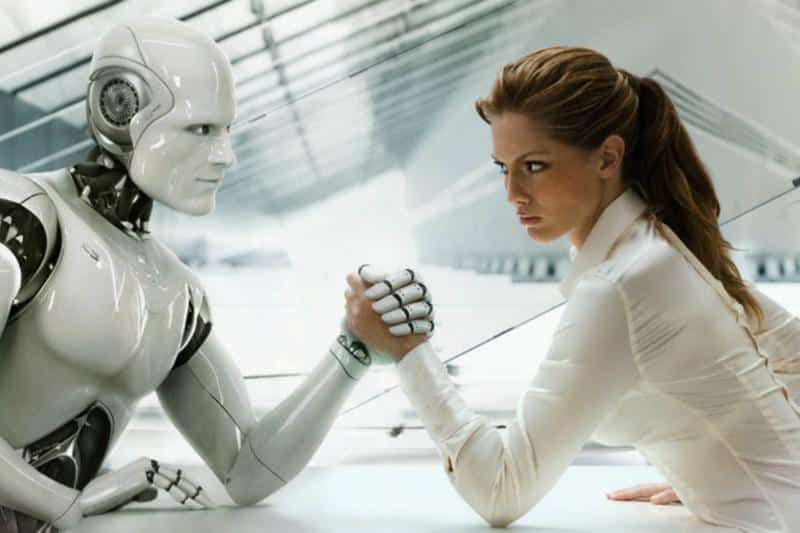

Like everyone, I don't want an Earth dominated by killer robots. I would much rather have a world like The Jetsons: a robotic world where our creations work alongside us to make things better. If today's work focuses only on the military uses of robots, however, we must prepare for a very dark future.

We are tormented by the possibility of robots and AI becoming self-aware and attempting to conquer the world or wipe us out, simply because we know that this is a possibility. And we also know ourselves: humans have an extraordinary ability to create new ways to put themselves in danger.

To avoid this we have only two options

First option: abandon and/or outlaw everything (and I mean everything) related to artificial intelligence, the real brain of a robotic future. Would it work? Just like any effort to control, for example, nuclear weapons or ethically questionable medical research: no. The human desire to satisfy one's curiosity and gain an advantage is inextinguishable. That's how we are made.

Second option: ensure that everyone realizes the potential dangers, and build a framework within which no robotic product is able to harm a human. A form of moral sense? If you like. Someone, in any case, is already trying.

Needless to say, the second option seems to be the only viable one, especially if we are on the verge of a Singularity. Our metaphorical "children" in a robotic world will quickly outgrow our ability to control them, and we may have no guarantee that they will abide by our rules. We risk ending up in the position we now assign to, say, a gorilla: an animal close to us, yet with very limited possibilities.

This is why it is better to instill in them a moral sense (one that we should all follow first), hoping that the robotic future will follow it and that the devices we build will have their own "common sense".