In a bright environment, full of monitors and technological equipment, a robot stands as the protagonist. Its metal structure reflects light, but it is in its "eyes" that the true magic is hidden. These eyes, powered by DeepMind's RT-2 model, are capable of seeing, interpreting and acting.

As the robot moves gracefully, the scientists around it scrutinize its every move. It's not just a piece of metal and circuitry, but the embodiment of an intelligence that unites the vast world of the web with tangible reality.

The evolution of RT-2

Robotics has come a long way in recent years, but DeepMind has just taken the game to a whole new level. Described in a newly released paper, RT-2 has arrived. What is it? It is a vision-language-action (VLA) model that learns from both web data and robotic data, translating this knowledge into generalized instructions for robotic control.

In an era where technology advances by leaps and bounds, RT-2 represents a significant step forward, promising to revolutionize not only the field of robotics, but also the way we live and work every day. But what does this mean in practice?

DeepMind RT-2, from vision to action

High-capacity vision-language models (VLMs) are trained on large datasets, which makes them extraordinarily good at recognizing visual and linguistic patterns (operating, for example, across different languages). But imagine being able to make robots do what these models do. Better yet, stop imagining it: DeepMind is making it possible with RT-2.

Robotics Transformer 1 (RT-1) was a marvel in its own right, but RT-2 goes further, displaying enhanced generalization capabilities and a semantic and visual understanding that goes beyond the robotic data it has been exposed to.

Chain-of-thought reasoning

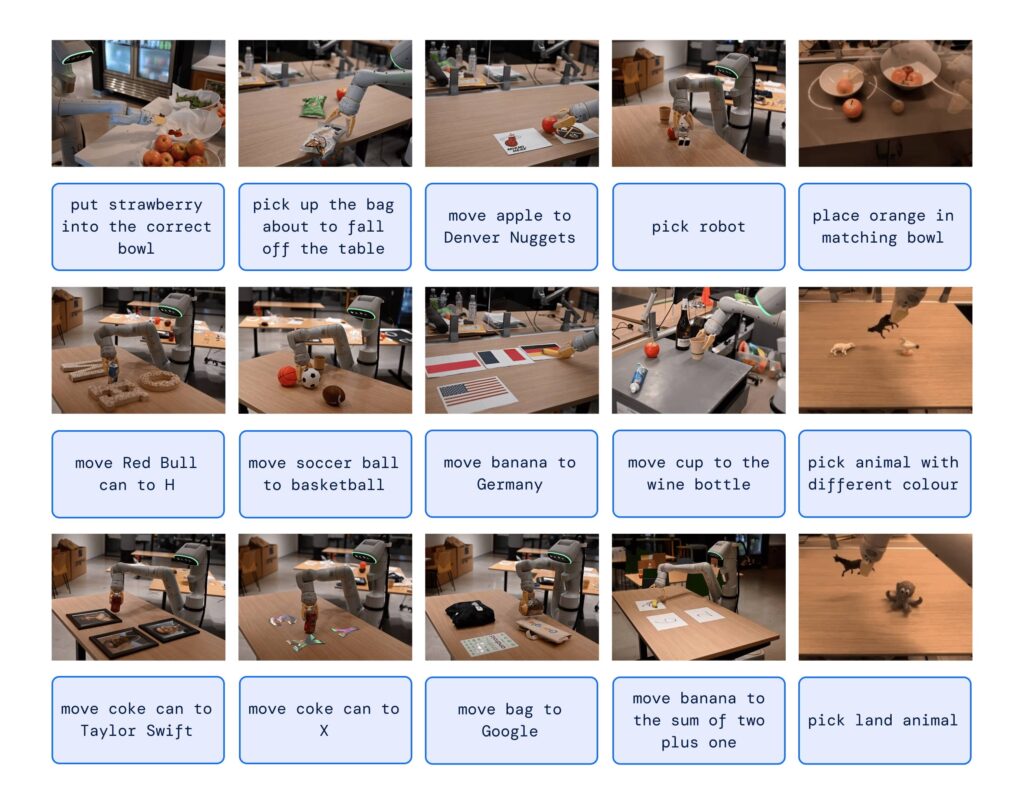

One of the most fascinating aspects of RT-2 is its chain-of-thought reasoning ability. It can decide which object could be used as a makeshift hammer or what kind of drink is best for a tired person. This deep reasoning ability could revolutionize the way we interact with robots.

And if worst comes to worst, you could always ask a robot to make you a good coffee to regain some clarity.

But how does DeepMind RT-2 control a robot?

The answer lies in how it was trained. Robot actions are represented in a form not unlike the language tokens used by models like ChatGPT, so the model can output an action the same way it outputs text.
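To make the idea of "actions as tokens" concrete, here is a minimal sketch of one way a continuous robot command could be turned into a short string of integers and back. It is an illustration, not DeepMind's code: the function names, the [-1, 1] value range and the 256 bins per dimension are all assumptions chosen for the example.

```python
from typing import List, Sequence

NUM_BINS = 256  # assumed number of discrete bins per action dimension


def action_to_tokens(action: Sequence[float], low: float = -1.0,
                     high: float = 1.0, num_bins: int = NUM_BINS) -> str:
    """Map each continuous value to an integer bin and join the bins as text."""
    tokens: List[str] = []
    for value in action:
        clipped = min(max(value, low), high)  # keep the value inside [low, high]
        scaled = (clipped - low) / (high - low) * (num_bins - 1)
        tokens.append(str(int(scaled + 0.5)))  # round to the nearest bin index
    return " ".join(tokens)


def tokens_to_action(text: str, low: float = -1.0, high: float = 1.0,
                     num_bins: int = NUM_BINS) -> List[float]:
    """Invert action_to_tokens: turn a string of bin indices back into floats."""
    return [low + int(tok) / (num_bins - 1) * (high - low) for tok in text.split()]


# Hypothetical 3-value command (for example, a small arm displacement).
command = [0.3, -0.75, 1.0]
as_text = action_to_tokens(command)
recovered = tokens_to_action(as_text)
```

Once a command is written this way, a language model can treat it like any other sequence of tokens. The price is a small rounding error, at most half a bin width per value, when the text is decoded back into numbers.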

RT-2 demonstrated striking emergent capabilities, such as symbol understanding, reasoning and human recognition. On these skills it shows an improvement of more than 3x compared to previous models.

With RT-2, DeepMind not only showed that vision-language models can be transformed into powerful vision-language-action models, but it also opened the door to a future in which robots can reason, solve problems and interpret information to perform a wide range of tasks in the real world.

And now?

In a world where artificial intelligence and robotics will be increasingly central, RT-2 shows us that the next evolution will not be purely technical, but "perceptual". Machines will understand and respond to our needs in ways we never imagined.

If this is just the beginning, who knows what the future holds.