This post is part of "Periscopio", the LinkedIn newsletter that explores Futuro Prossimo themes every week and is published in advance on the LinkedIn platform. If you want to subscribe and read it early, you can find everything here.

A completely robotic world, in which machines collaborate with humanity in every possible way, has been a recurring theme in science fiction for years: books, films, games. And, as always, it is told in a polarized way.

Will we find ourselves in a global landfill where machines help us search for the lost meaning of life among waste and scrap? In a post-apocalyptic scenario dominated by artificial (and hostile) intelligence? Or on a planet where humanity and machines coexist in a robotic and vaguely aseptic equilibrium (think Asimov)?

The shape of the future we imagine

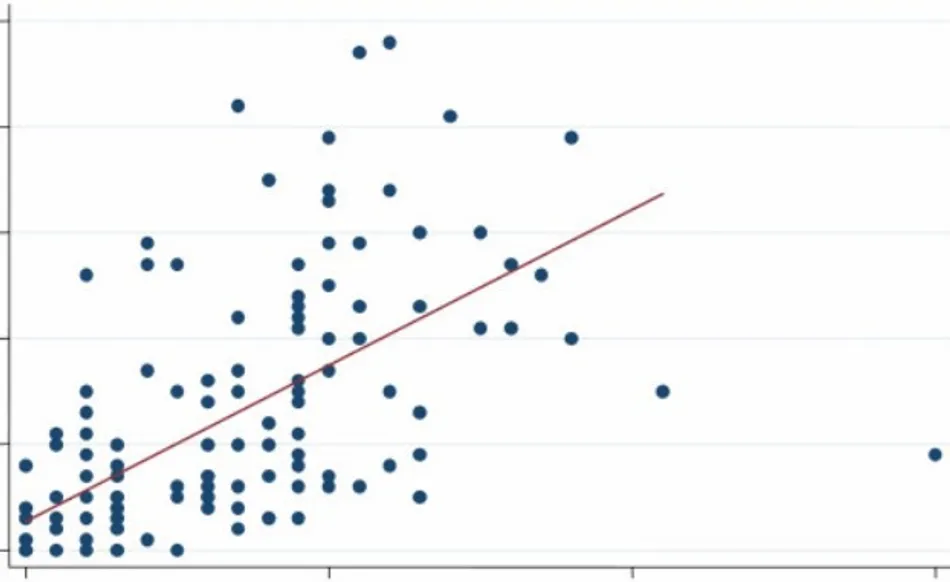

If we put all our versions of the robotic future on one graph, we could get a look like this:

Let's not deny it: most of our visions are pessimistic. Are we right to fear the worst? Let's compare research in robotics and artificial intelligence (two fields that will increasingly go hand in hand) with other technological advances of similar magnitude. One example above all: research on atomic energy. It started with a bomb, driven by military programs. And guess what is happening today?

Nothing new. Unfortunately.

Everyone working in robotics and AI today broadly agrees that we must design machines with precise ethical rules that put human life first. Any robotic device equipped with artificial intelligence must be able to recognize humans and avoid harming them. At any cost.

Is that really so? As I write, the US, Russia, China and other countries are all running programs to build (and deploy) AI-driven drones and field robots designed to kill people.

Robots like the one in the video below will be able to “steer” themselves.

It is a dramatic repetition: once again, before we have even entered the robotic age, we are ignoring the warnings of two generations of scientists and futurists who have spent years thinking about these problems. In vain?

The robotic world we want is a different one

“We, the people,” someone might say, “do not want an Earth dominated by killer robots.” With our minds we fear dystopia; with our hearts we long for utopia.

We want a world where robots and artificial intelligence help us make everything better. But to get there, we could go through hell if military goals once again drive the robotic future.

NEVER underestimate science fiction. It inspires the future, but it also captures the present. There's a reason why so much of our science fiction revolves around robots becoming self-aware and trying to take over the world or wipe out humanity.

We recognize that this is a possibility, but it is not the machines' fault. It is ours. We are the ones who excel at finding ever new ways to endanger ourselves. Is it distrust of our own species? Or intimate knowledge of its limits? Both.

Two ways to avoid disaster

First: abandon or ban all work on artificial intelligence? It probably wouldn't work, any more than attempts to rein in nuclear weapons or ethically questionable medical research have.

Some will agree, many will not. And it only takes one holdout: the human drive to satisfy curiosity and gain an advantage over others is insurmountable.

Second: educate, educate, educate. Educate both humans and machines. Make sure everyone understands the potential dangers, and build a social, political, cultural, but above all technological frame of reference.

A framework in which the robotic world is not hostile to humans. Whether or not it ever arrives, the technological singularity is by definition something violent and sudden: a momentous passing of the baton.

If we don't prepare the ground well, losing control will not be a mere possibility but a natural and sudden certainty. The evolution of machines will slip out of our hands, and we will find ourselves in a position similar to the one we have forced on the lowland gorilla or the giant panda.

For this reason I applaud the first, almost touching efforts of engineers and scientists to “instill” in machines a morality that we ourselves do not know how to respect.

We need to do it before these machines open their eyes and show a spark. We have to do it so that the spark is one of love, and perhaps one that also teaches us something about ourselves.