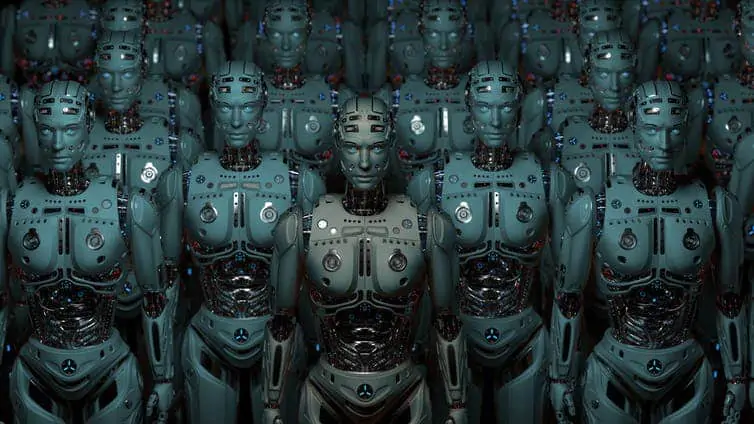

Future wars will be faster and more technological, but less human than ever: welcome to the era of robot soldiers.

There is a perfect place to test advanced weapons: Wallops Island, a small patch of land off the coast of Virginia that seems to have come straight from Asimov's pen. If a fishing boat had passed by a year ago, its crew would have seen half a dozen inflatable boats circling in the area; a closer look would have revealed that the boats had no one on board.

The boats' engines could adjust to the situation, and each boat transmitted its actions and position to all the others, instantly organizing the fleet according to a strategy. That strategy could even include opening fire, perhaps to defend troops stationed on the shore.

The semi-secret effort, part of a US Navy program called Sea Mob, makes it clear that machines equipped with artificial intelligence will soon be perfectly capable of carrying out even lethal attacks without any human supervision, in defiance of the laws of robotics.

Formulated by Isaac Asimov, one of the fathers of science fiction, the three laws of robotics were first published in a 1942 short story. The Russian-born American writer often mentions them in his novels: they are rigid principles, not to be transgressed, devised to reassure humanity about the good "intentions" of robots.

Who knows whether Asimov foresaw that his "laws" would one day risk falling apart, as is now about to happen.

Automatic death

The idea of sending large, deadly, autonomous machines into battle is not new: similar systems have been tested for decades, albeit defensive ones, such as those that provide protection against hostile missiles. The development of artificial intelligence will allow the creation of offensive systems that do not simply respond to stimuli, but decide on their own what to do, without human input.

It takes a human a quarter of a second to react to seeing something (think of the time a goalkeeper needs to decide which way to dive during a penalty). The machines we have created already outperform us, at least in speed. This year, for example, researchers at Nanyang Technological University, in Singapore, trained a neural network on data from 1.2 million images. The computer tried to identify the subjects in the photos, and it took 90 seconds in all: 0.000075 seconds per photo.
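The per-photo figure follows directly from the numbers quoted above. As a minimal sketch (the variable names are illustrative, and the quarter-second human reaction time is the article's own round figure), the arithmetic can be checked like this:

```python
# Figures quoted in the article
images = 1_200_000        # photos processed by the neural network
total_seconds = 90        # total time taken
human_reaction_s = 0.25   # approximate human reaction time

# Average time the machine spent on each photo
per_image_s = total_seconds / images

# How many photos the machine handles in one human reaction time
photos_per_reaction = human_reaction_s / per_image_s

print(f"{per_image_s:.6f} seconds per photo")
print(f"about {photos_per_reaction:,.0f} photos per human reaction time")
```

In other words, in the time a person needs to react to a single image, the system works through roughly 3,300 of them.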

For now there is still a lot to do: at this incredible speed the system identified the person in the photo in only 58% of cases, a success rate that would be disastrous in battle. However, it is well established that machines can move much faster than we can, and the gap will only widen. In the next decade the new generation of missiles will travel at suborbital altitudes, too quickly for a human to decide how to counter them or manage the situation. Swarms of autonomous drones will attack, and other computers will respond at supersonic speed.

“When war becomes so fast, at what point does the human being become an obstacle?” asks Robert Work, deputy secretary of defense under both Obama and Trump. “There is no way for us to intervene, so we will delegate to the machines.” So much for Asimov, in short.

Weapons that make themselves

In every branch of the military, research is now under way to make war as fast, as precise and as inhuman as possible.

The US Navy is testing a 135-ton ship called Sea Hunter, which patrols the oceans without any crew, looking for submarines that it may one day be able to attack directly. In one test, the ship traveled 4,000 km from Hawaii to California entirely on its own, without human intervention.

The Army, meanwhile, is developing new "point and shoot" systems for its tanks, and a missile system called JAGM, the Joint Air-to-Ground Missile. JAGM can choose which vehicles to target without human intervention. In March, the Pentagon requested funds from Congress to build 1,051 JAGMs at a cost of 360 million euros.

And the Air Force? Its tests are all focused on the “SkyBorg” project (the name says it all): an unmanned version of the F-16 capable of fighting on its own.

Until yesterday, soldiers who wanted to cause an explosion at a remote site had to decide how and when to strike: with a plane? A missile? A ship? A tank? They then had to direct the bomb, aim it and trigger it.

Drones and systems like Sea Mob are cutting humans out of such decisions entirely. The only decision left to a military command (and it should not be taken hastily or against the general consensus) is when the robots are to be left free to kill, in violation of Asimov's famous laws, perhaps starting with the moments when radio communications are cut off during an operation.

It is not a matter restricted to the United States alone

In the 1990s Israel began designing a system called HARPY, which today we would describe as artificial intelligence. The drone was capable of flying over areas covered by radar installations and attacking them independently. Its manufacturer then sold the system to China and other countries.

In the early 2000s, the United Kingdom developed the Brimstone missile, capable of finding enemy vehicles on the battlefield and “deciding” which ones to hit.

Last year, in 2018, Russian President Vladimir Putin spoke of an underwater drone equipped with nuclear weapons, opening up a scenario in which an automated device could carry the most lethal weapon ever created by humans. Putin himself, moreover, has said that the nation capable of developing the best AI “will become master of the world”.

China has not made any grand declarations, but the fact that it is at the forefront of artificial intelligence still gives pause for thought. It is expected to become the leading country in this field within the next 10 years, and may soon exploit that lead militarily.

The technological cold war

The fear of being overtaken by countries like China or Russia has pushed the USA to spend heavily on the development of AI: 600 million dollars in 2016, 800 million in 2017 and over 900 million this year. The Pentagon has not revealed details about these efforts, but from official interviews it is easy to deduce that many military technologies will emerge from AI research, even if they are not immediately ready to take full command.

Yet it can happen

The heavy investment the USA is making to regain technological (and therefore military) supremacy multiplies the risk of forcing the machines' hand, or of letting them force ours. Robert Work says it clearly: “There will be a moment when the machines will 'make a mistake' induced by us, because we are not aiming to have the perfect machine, only the most useful one in the shortest time possible.”

The idea that machines could decide entirely on their own to kill human beings, violating Asimov's laws of robotics, is one our imagination still rejects outright, as something out of apocalyptic films like Terminator. But these are not distant possibilities.

On a planet where nations are increasingly competitive in this field, the possibility that autonomous machines will inflict damage on adversaries, including the loss of human lives, is real. It is not pleasant to say, still less to hear, but it is so.