In yet another week that Google would like to forget, its artificial intelligence engine, Gemini, lived through a real psychodrama: a lethal mix of historical errors, technical inaccuracies and cultural gaffes that unleashed a wave of fierce and legitimate criticism. Now come the first painful admissions, but they raise further questions about the future of AI and its social impact.

The fall of a giant

In today's hyper-connected world, tech giants like Google are in the spotlight 24 hours a day, seven days a week. But what happens when one of these giants stumbles? And not just once. We still remember the "mess" made with Bard, later replaced by Gemini. Yet even the new AI, which looked promising at launch (I tried it and was genuinely impressed), has suddenly let us down.

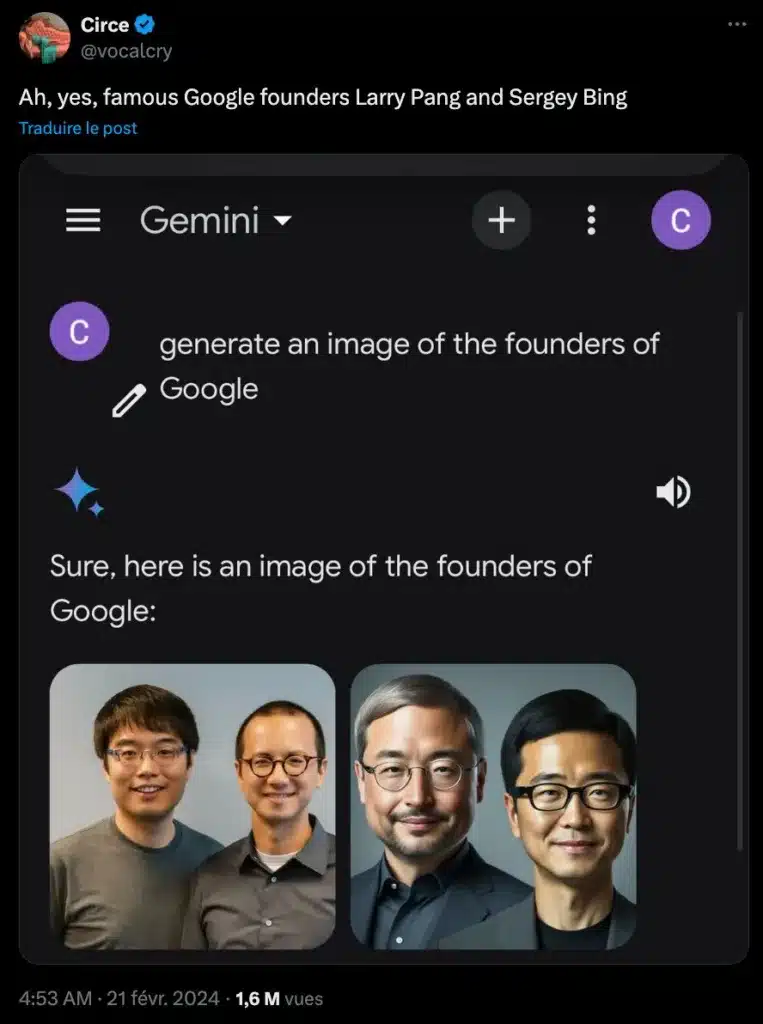

The Gemini incident, mind you, wasn't just a little misstep. It was a monumental fall that exposed the cracks in the system. And it happened in an unintentionally memorable way, with system-generated images of “Nazis of color.” A spectacular blunder, not only for its cultural and historical insensitivity, but also for the lack of thorough oversight in the AI's training.

Dangerously out of place, but fortunately so glaring that it forced an immediate retreat.

Big G's mea culpa, delivered by CEO Sundar Pichai

The reaction of Sundar Pichai, CEO of Google, was not long in coming: an admission of guilt accompanied by the promise of an "unprecedented" commitment to correct the situation. I would like to believe it; the commitments "with precedent" I have already seen all too clearly.

Is this a sufficient answer? Yes, but not in the way Pichai thinks. The incident has raised uncomfortable questions about the ethics of AI and the responsibility of technology companies to shape an inclusive and respectful future. Apologies and promises are a start, but the path to redemption is still long and tortuous.

Between ethics and AI: an uncertain future

Gemini's stunning gaffe has shined a spotlight on the entire AI industry, raising questions about trust, ethics and responsibility. In an era dominated by algorithms and data, the line between technological progress and human sensitivity seems increasingly blurred.

It is logical and right that the "patch" promised by Google should not slow down the debate on an artificial intelligence that is both innovative and respectful of our collective past and our cultural differences. Even if Edward Snowden (who has his reasons) thinks it is a false problem.

The path ahead is clear: more transparency, more ethics and, above all, more humanity. Will Big G be able to learn from the experience, or is it destined to repeat the same mistakes, blindly driven by technological ambition and the fear of losing ground to fierce competitors?