The next time you buy a new sofa, you may be able to "touch" its fabric with your fingers directly online.

Dr. Cynthia Hipwell of Texas A&M University is leading a team working to better define how the finger interacts with a device. The goal? To contribute to the development of next-generation touchscreen-based haptic technology.

The team's research was recently published in Advanced Materials.

A touch screen to touch and touch again

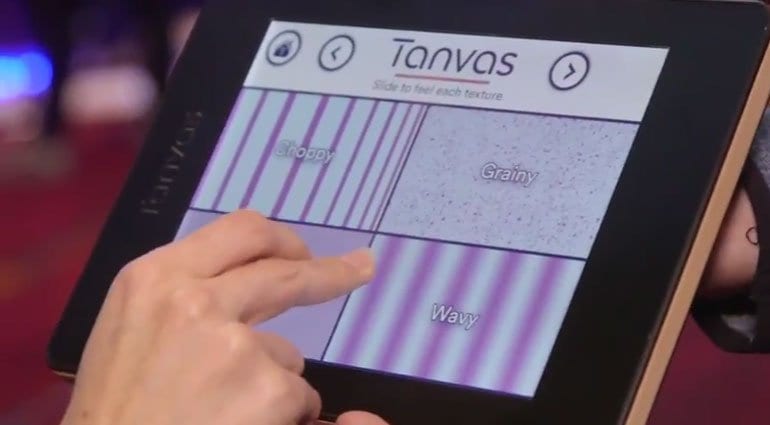

The ultimate goal, as mentioned, is to give touch devices the ability to provide users with a richer touch-based experience that mimics the sensation of physical objects. Hipwell shared examples of potential applications of haptic technology. They range from a more immersive virtual reality platform to tactile display interfaces like those in a vehicle dashboard, and even a virtual shopping experience that would let users feel the texture of materials before purchasing them.

“This could allow you to actually feel textures, buttons, slides and knobs on the screen,” Hipwell says.

The ideal would be to bring haptic technology into shopping, so that you can feel the texture of fabrics and other products as you shop online.

Cynthia Hipwell

Touch the web

Hipwell explained that, in essence, the “touch” in current touch screen technology is more for the benefit of the screen than the user. With the emergence and refinement of increasingly sophisticated haptic technology, the relationship between user and device will become more reciprocal.

The addition of these sensory inputs will ultimately enrich virtual environments and lighten the communication burden currently carried by audio and visuals.

When we look at virtual experiences, right now they are primarily audio and visual. With the metaverse on the horizon, bringing touch into human-machine interfaces can open up many more possibilities. A touch screen can attract attention more discreetly and avoid an overload of audiovisual stimuli.

Work on the touch screen

The Texas A&M researchers are focusing on the so-called "multiphysics" of the interactions between finger and device. Multiphysics refers to the set of processes that occur simultaneously and involve multiple physical fields. Needless to say, these interactions are very complex, and they change from user to user and from condition to condition.

It's not just about the mechanics of touch: the type of movement, its intensity and the electrostatic charge of the finger all come into play. While perception of color and audio can be relatively unambiguous (interpreting a word, or distinguishing a color), tactile technology involves more parameters. The team's work aims to create predictive models that achieve the maximum tactile effect while limiting variation due to environmental conditions.

Will they ever succeed? Both the research and the development of this technology continue to progress, and I believe that consumers will begin to see the first elements of it in everyday devices within the next five years. Some of the first products are already under development.