A research team has created an artificial intelligence that draws what a person is looking at in real time, simply by reading and decoding their brain waves.

Most importantly, the technique is non-invasive: it collects and decodes brain-wave information using an electrode headset connected to an electroencephalography (EEG) system.

“Researchers thought that studying brain processes using EEG was like trying to guess a person's shape by analyzing their cigarette smoke,” says researcher Grigory Rashkov in a statement. “We didn't expect it to contain enough information to even partially reconstruct an image seen by a person. Yet it turned out to be entirely possible.”

The research

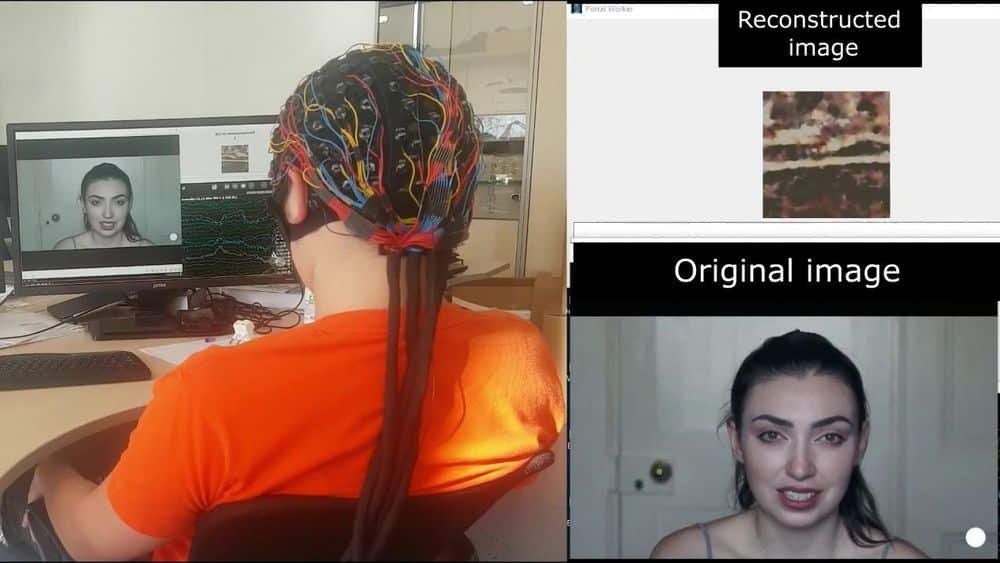

The joint team, made up of members of the Moscow Institute of Physics and Technology and the Russian company Neurobotics, began the study by fitting participants with a special electrode headpiece that records their brain waves.

In the second phase, each participant watched 20 minutes of video clips lasting 10 seconds each, drawn from five categories. After the first few sessions, the researchers found that they could tell which category of video a volunteer was watching simply by looking at the EEG data.
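To make the idea of reading a video category off EEG data more concrete, here is a minimal sketch in Python. It is not the team's method: the feature vectors are synthetic stand-ins for EEG features, the nearest-centroid classifier is swapped in purely for illustration, and every name (`make_synthetic_features`, `fit_centroids`, `run_demo`) is hypothetical. The even spread of clips across categories and the 90/30 train/test split are also assumptions. The only figures taken from the article are the 20-minute session, the 10-second clips (which works out to 120 clips) and the five categories.

```python
import random

CLIP_SECONDS = 10      # from the article
SESSION_MINUTES = 20   # from the article
N_CATEGORIES = 5       # from the article


def clips_per_session():
    """Number of 10-second clips that fit into a 20-minute session."""
    return SESSION_MINUTES * 60 // CLIP_SECONDS


def make_synthetic_features(category, rng, n_features=8):
    # Hypothetical stand-in for EEG features: each category gets its own
    # offset plus Gaussian noise. Real EEG features are far messier.
    return [category + rng.gauss(0.0, 0.3) for _ in range(n_features)]


def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def fit_centroids(samples):
    """Compute one mean feature vector per category label."""
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {label: centroid(vectors) for label, vectors in by_label.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest to the feature vector."""
    return min(centroids, key=lambda label: squared_distance(centroids[label], features))


def run_demo(seed=0):
    rng = random.Random(seed)
    n_clips = clips_per_session()
    # Assumption: clips are spread evenly across the categories.
    labels = [i % N_CATEGORIES for i in range(n_clips)]
    samples = [(make_synthetic_features(label, rng), label) for label in labels]
    train, test = samples[:90], samples[90:]
    centroids = fit_centroids(train)
    correct = sum(predict(centroids, features) == label for features, label in test)
    return n_clips, correct / len(test)
```

Calling `run_demo()` returns the number of clips in a session and the fraction of held-out clips whose category was guessed correctly. Because the synthetic categories are deliberately well separated, the toy classifier has an easy job; it says nothing about how hard the task is on real recordings.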

In the third phase, the scientists developed two neural networks: one trained to generate images from three of the video categories, and another to transform the EEG data into noise comparable to what the first network takes as input. Put in competition as a generative adversarial network (GAN), the two networks produced surprising images.
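The step of turning EEG data into "comparable noise" can be pictured with a small Python sketch. This is an illustration under stated assumptions, not the researchers' network: a fixed random linear projection stands in for what would be a trained model, and the output is standardized so that it resembles the zero-mean, unit-variance vector an image generator commonly takes as input. The names `make_projection` and `eeg_to_latent`, and the vector sizes, are hypothetical.

```python
import math
import random


def make_projection(n_in, n_out, seed=0):
    # Assumption: a random matrix stands in for weights that a real
    # system would learn from paired EEG and video data.
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]


def eeg_to_latent(features, projection):
    """Project an EEG feature vector and standardize the result."""
    raw = [sum(w * x for w, x in zip(row, features)) for row in projection]
    mean = sum(raw) / len(raw)
    variance = sum((r - mean) ** 2 for r in raw) / len(raw)
    std = math.sqrt(variance) or 1.0  # avoid dividing by zero
    return [(r - mean) / std for r in raw]
```

For example, `eeg_to_latent([0.5, -1.2, 0.3, 2.0], make_projection(4, 16))` yields a 16-element vector with zero mean and unit variance, the kind of input a generator network could then turn into an image.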

“With today's technology, invasive neural interfaces such as those under development by Neuralink, Elon Musk's company, must face the challenges of complex surgery and rapid deterioration due to natural processes. The systems can oxidize and fail in a few months,” says Rashkov.

“With this study we aim to design more convenient neural interfaces that do not require implants.”