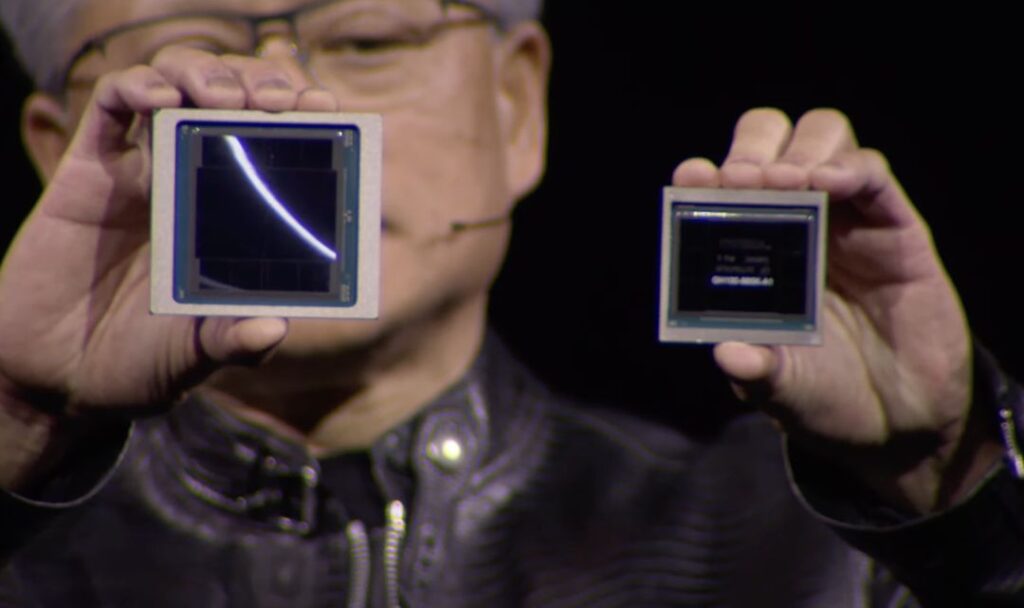

Nvidia, the GPU giant that has conquered the world of artificial intelligence, shows no signs of slowing down. On the contrary, with the unveiling of the new Blackwell B200 GPU and the GB200 "superchip", it seems intent on further extending its lead over the competition.

These monsters of computing power, which Nvidia says deliver up to 30 times the performance of the already impressive H100, promise to redefine the horizons of AI and consolidate Nvidia's dominance in an increasingly strategic and competitive sector.

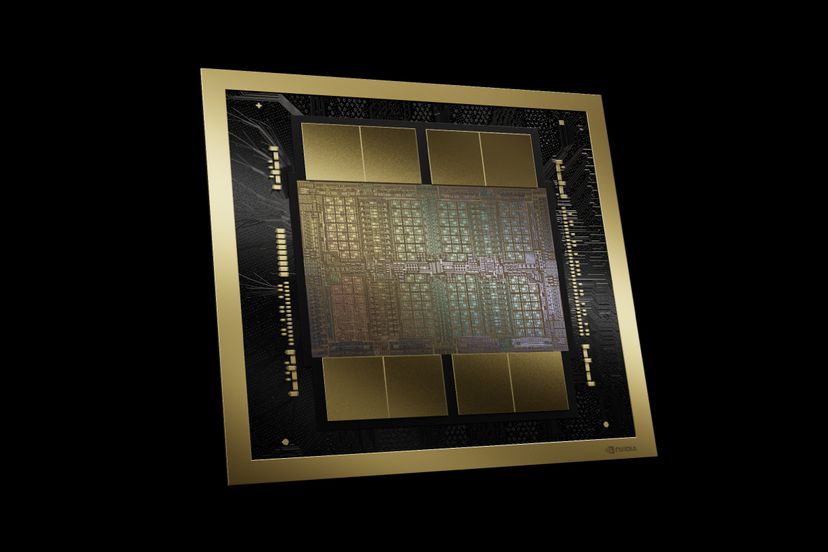

Blackwell B200, 208 billion transistors and 20 petaflops of pure power

The numbers make your head spin. The new B200 GPU packs a whopping 208 billion transistors (yes, you read that right, billions) into a single chip, unleashing up to 20 petaflops of 4-bit floating point (FP4) computing power. To give you an idea, that is comparable to the power of 20 million high-end laptops. And all this in a single chip the size of a postcard.

But Nvidia's real ace in the hole is the Blackwell GB200, a "superchip" that fuses two B200 GPUs with a Grace CPU in a single package. This beast of up to 40 petaflops (two B200s at up to 20 each) promises to deliver up to 30x the performance of the H100 on large language model (LLM) inference tasks while, according to Nvidia, cutting cost and energy consumption by up to 25 times. In two words: more power, less expense, less environmental impact. Yes, that was seven words.

When one chip isn't enough, the "transformer engine" takes care of it

How does Nvidia bring out all this power? One of the secrets lies in the second-generation "transformer engine": a dedicated architecture that doubles computing capacity by representing each neuron with four bits instead of eight, fitting more neurons into less space for ever larger and faster neural networks.
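To get a feel for what 4-bit values buy you in terms of space, here is a minimal Python sketch. It is only an illustration of storage density, not Nvidia's transformer engine: the helper names `pack_nibbles` and `unpack_nibbles` are hypothetical, and the sketch uses plain 4-bit integers rather than the actual FP4 floating-point format.

```python
def pack_nibbles(values):
    """Pack 4-bit integers (0..15) two per byte. Hypothetical helper."""
    if any(not 0 <= v <= 15 for v in values):
        raise ValueError("each value must fit in 4 bits (0..15)")
    # Pad with a zero so the values always pair up evenly.
    padded = list(values) + [0] * (len(values) % 2)
    return bytes((hi << 4) | lo for hi, lo in zip(padded[0::2], padded[1::2]))


def unpack_nibbles(packed, count):
    """Recover the first `count` 4-bit integers from packed bytes."""
    out = []
    for byte in packed:
        out.append(byte >> 4)    # upper four bits
        out.append(byte & 0x0F)  # lower four bits
    return out[:count]


weights = [3, 15, 0, 7, 9, 1, 12, 4]   # toy "weights", one per neuron
eight_bit_size = len(bytes(weights))   # one byte per value
four_bit_size = len(pack_nibbles(weights))  # two values per byte

print(eight_bit_size, "bytes at 8 bits per value")
print(four_bit_size, "bytes at 4 bits per value")
```

Same number of values, half the bytes. The price is precision: four bits can only tell 16 different values apart, against 256 for eight bits.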

What happens when you put dozens or hundreds of these chips together in a server? Up to 576 GPUs can “talk” to each other, with a bidirectional bandwidth of 1.8 terabytes per second for each GPU. In three words (for real this time, I swear): extreme parallel computing.
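For a rough sense of scale, the two figures above can be multiplied together. This is back-of-the-envelope arithmetic, not a benchmark: it assumes the 1.8 terabytes per second applies to each GPU and that every link is saturated at once, so it is an upper bound that ignores topology and protocol overhead. The function name is made up for the example.

```python
GPUS_IN_DOMAIN = 576           # maximum GPUs quoted in the article
PER_GPU_BANDWIDTH_TBPS = 1.8   # bidirectional TB/s, assumed to be per GPU


def aggregate_bandwidth_tbps(gpus, per_gpu_tbps):
    """Naive upper bound: every GPU link fully busy at the same time."""
    return gpus * per_gpu_tbps


total = aggregate_bandwidth_tbps(GPUS_IN_DOMAIN, PER_GPU_BANDWIDTH_TBPS)
print(f"{total:.1f} TB/s, roughly {total / 1000:.1f} PB/s")
```

Under those assumptions the whole cluster moves on the order of a petabyte per second.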

From chips to supercomputers, it's a short step

If you want an idea of what we're going to see soon, the systems driven by these Blackwells will be able to train models with 27 trillion parameters. GPT-4 reportedly boasts “just” 1.7 trillion.
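To put those parameter counts in perspective, here is a quick Python estimate of how much memory the weights alone would occupy at different precisions. It is a sketch under simple assumptions: one value per parameter, decimal terabytes, and nothing counted beyond the weights themselves (real training also needs room for gradients, optimizer state and activations). The parameter counts are the ones quoted above, and the function name is invented for the example.

```python
BITS_PER_VALUE = {"FP16": 16, "FP8": 8, "FP4": 4}


def weight_memory_tb(parameters, bits_per_value):
    """Terabytes (10**12 bytes) needed to store the weights only."""
    return parameters * bits_per_value / 8 / 1e12


models = {
    "27 trillion parameters": 27e12,
    "1.7 trillion parameters": 1.7e12,
}

for label, parameters in models.items():
    for fmt, bits in BITS_PER_VALUE.items():
        size = weight_memory_tb(parameters, bits)
        print(f"{label} at {fmt}: {size:.2f} TB")
```

Even at 4 bits per parameter, a 27-trillion-parameter model needs about 13.5 TB just for its weights, which is why it takes a rack of interconnected GPUs rather than a single chip.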

Nvidia seems to be throwing down a challenge to the entire world of AI: do you want to go fast? Follow us. And the big cloud players seem to have got the message: Amazon, Google, Microsoft and Oracle have already lined up to offer these gems in their services. A sign that the hunger for computing power is stronger than ever.

With Blackwell, is Nvidia killing the market?

Some turn up their noses at Nvidia's overwhelming power, which risks creating a de facto monopoly in a sector as crucial as artificial intelligence. It is a fair question to ask. Others fear that this race toward “bigger, faster, more powerful” could lead to the uncontrolled development of increasingly complex and unpredictable AI systems. That is a legitimate concern too.

But beyond these question marks, with Blackwell and GB200 Nvidia has shown that it has its foot pressed on the accelerator of innovation. Hard. And that it has no intention of lifting it.

Like it or not, the future of AI increasingly speaks the language of GPUs. And it has the sly face of Jensen Huang.