If you smile at someone and they don't respond, you will probably find it off-putting. No? Well, this robot would give you more satisfaction than some neighbors do.

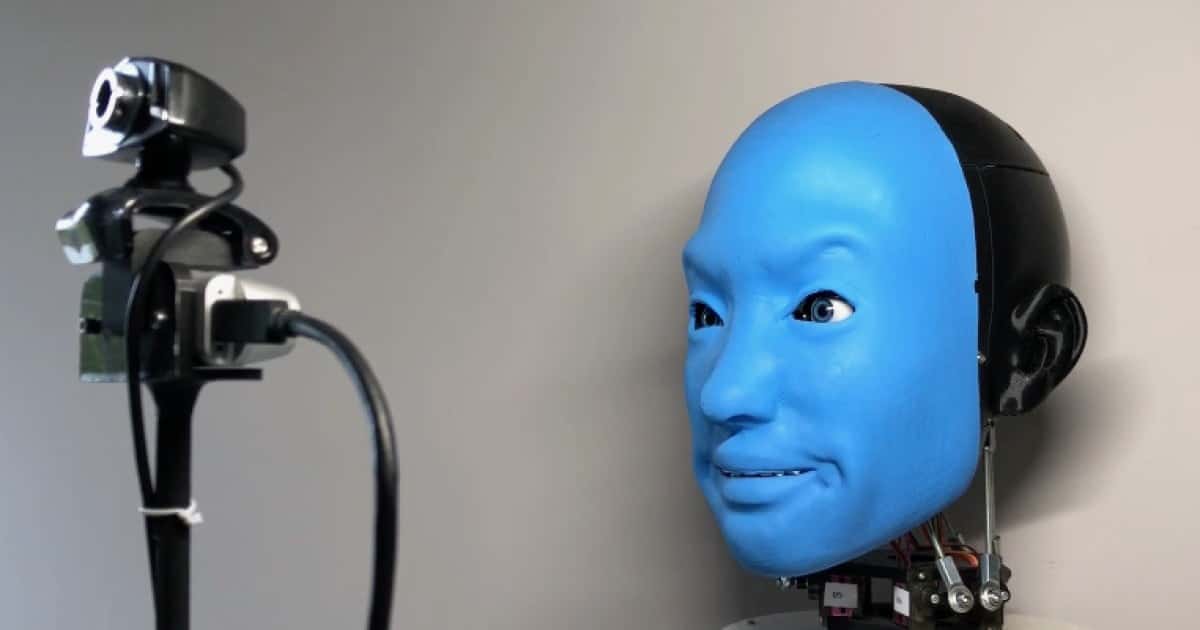

Developed by a team of engineering researchers at Columbia University in New York City, the EVA project is actually a humanoid robotic head. It is designed to explore the dynamics of human-robot interaction through facial expressions. It consists of a 3D-printed, adult-sized synthetic skull with a soft rubber face on the front.

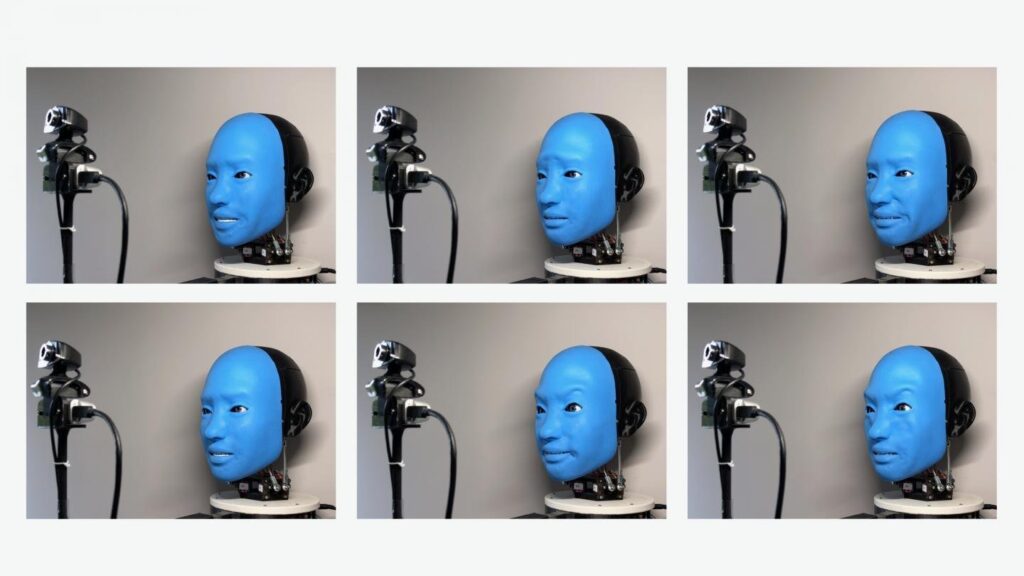

Motors inside the skull selectively pull and release cords attached to various locations on the lower face, just as muscles under the skin of our faces allow us to show different facial expressions. For its part, EVA can express emotions such as anger, disgust, fear, joy, sadness and surprise, as well as “a range of more nuanced emotions”.

A robot that mimics our facial expressions

To develop its ability to mimic facial expressions, the scientists began by filming EVA as it randomly moved its face. When the computer controlling the robot later analyzed the hours of footage, it used an integrated neural network to learn which combinations of “muscle movement” corresponded to which human facial expressions.
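The idea of learning from random face movements can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the three "motors", the two face features, and the `simulated_face` function standing in for the camera are hypothetical, and a simple nearest-neighbor lookup replaces the neural network, whose details the article does not describe.

```python
import random

def simulated_face(command):
    """Hypothetical stand-in for the camera: 3 motor pulls (0..1) -> 2 face features."""
    brow, cheek, jaw = command
    mouth_curve = cheek - 0.5 * jaw            # cheeks lift the mouth corners, the jaw drags them down
    eye_open = 0.5 + 0.5 * brow - 0.2 * cheek  # brows open the eyes, raised cheeks narrow them
    return (mouth_curve, eye_open)

def babble(n_samples, seed=0):
    """Move the face at random and record (motor command, resulting face) pairs."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n_samples):
        command = tuple(rng.random() for _ in range(3))
        samples.append((command, simulated_face(command)))
    return samples

def command_for(target_face, samples):
    """Return the recorded command whose observed face is closest to the target."""
    def sq_dist(face):
        return sum((a - b) ** 2 for a, b in zip(face, target_face))
    command, _ = min(samples, key=lambda sample: sq_dist(sample[1]))
    return command

samples = babble(2000)
smile = (0.8, 0.6)                  # assumed target: curved mouth, moderately open eyes
command = command_for(smile, samples)
produced = simulated_face(command)  # the face the toy robot actually makes
```

The more random movements are recorded, the closer `produced` tends to get to the requested face, which is roughly why hours of footage help.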

In the second phase of "training", when a connected camera captured the face of a person interacting with the robot, a second neural network was used to identify that individual's facial expressions and visually match them to those the robot was able to create. The robot then proceeded to take on those “faces” by moving its artificial facial muscles.
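The matching step can also be sketched in a few lines. This is only an illustration under stated assumptions: the four landmark points, the `ROBOT_FACES` repertoire and the distance-based matching are all hypothetical, standing in for the second neural network, which the article does not detail. The landmarks are normalized first so that the size and position of the person's face in the frame do not affect the match.

```python
import math

def normalize(landmarks):
    """Center the points on their mean and scale them to unit size."""
    n = len(landmarks)
    cx = sum(x for x, _ in landmarks) / n
    cy = sum(y for _, y in landmarks) / n
    centered = [(x - cx, y - cy) for x, y in landmarks]
    scale = math.sqrt(sum(x * x + y * y for x, y in centered)) or 1.0
    return [(x / scale, y / scale) for x, y in centered]

def distance(a, b):
    """Euclidean distance between two landmark lists of equal length."""
    return math.sqrt(sum((ax - bx) ** 2 + (ay - by) ** 2
                         for (ax, ay), (bx, by) in zip(a, b)))

def closest_expression(human_landmarks, robot_faces):
    """Name of the robot face whose normalized shape is nearest to the human one."""
    target = normalize(human_landmarks)
    return min(robot_faces,
               key=lambda name: distance(target, normalize(robot_faces[name])))

# Hypothetical repertoire: left mouth corner, right mouth corner, mouth center, chin (y points up)
ROBOT_FACES = {
    "joy": [(-1.0, 0.5), (1.0, 0.5), (0.0, 0.0), (0.0, -1.0)],
    "sadness": [(-1.0, -0.5), (1.0, -0.5), (0.0, 0.0), (0.0, -1.0)],
    "surprise": [(-0.5, 0.0), (0.5, 0.0), (0.0, 0.0), (0.0, -2.0)],
}

# A smiling face seen larger and elsewhere in the camera frame
human = [(80.0, 130.0), (120.0, 130.0), (100.0, 120.0), (100.0, 100.0)]
match = closest_expression(human, ROBOT_FACES)
```

Once a match is found, the robot would look up the motor commands that produce that face and play them back.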

Is it useful?

Although the engineers admit that simply imitating human facial expressions may have limited applications, they believe it could still be useful. It is no coincidence that so many labs are working on reproducing facial expressions. For example, it could help advance the way we interact with assistive technologies.

“There is a limit to how much we humans can emotionally interact with cloud-based chatbots or voice assistants,” says project leader Prof. Hod Lipson. “Our brains seem to respond well to robots that have some sort of recognizable physical presence.”

Don't tell me the next generation of Google Nest or Alexa will be a head talking to me, because I'll freak out. I'm out of here, okay?

You can see EVA mimicking facial expressions in this video.