Merged reality, or “fused reality”. A point after which reality and virtuality will be indistinguishable: is this the form the technological singularity will take?

Many AI researchers believe that we are heading towards a technological singularity in the form of an intelligence explosion. There will be a point, they say, after which artificial intelligence will improve on its own, and at breakneck speed. Beyond that point we cannot predict anything, and there are those who even fear for our survival.

What if the technological singularity were something totally different? What if it were an explosion not of intelligence, but of reality? In other words, what if we could no longer distinguish between a physical object and a digital one?

Many early AR and VR users think this will happen. Ericsson analysts call this experiential effect merged reality. Consumers predict that the first “fused” reality experience will be found in games. VR game worlds could come close to being indistinguishable from physical reality by 2030.

At the most basic level, physical and digital experiences are already merging for all of us. Most daily activities, from talking to people to shopping, are becoming a tangle of online and offline activities. Ten years ago we were still dividing the world in two: our physical existence and its digital shadow. We used to call these halves “offline” and “online.” Today these words no longer have much weight and are falling into disuse. As long as we can buy that t-shirt or talk to that person, offline or online doesn't matter anymore. The path to merged reality is clear.

AR and VR are popular technologies that today make it possible to mix digital experiences into physical reality along the so-called reality-virtuality continuum (below). Other technologies will also be needed to achieve merged reality. We are already on a path along which the boundaries between the physical and the virtual world begin to blur.

Immersive body movement

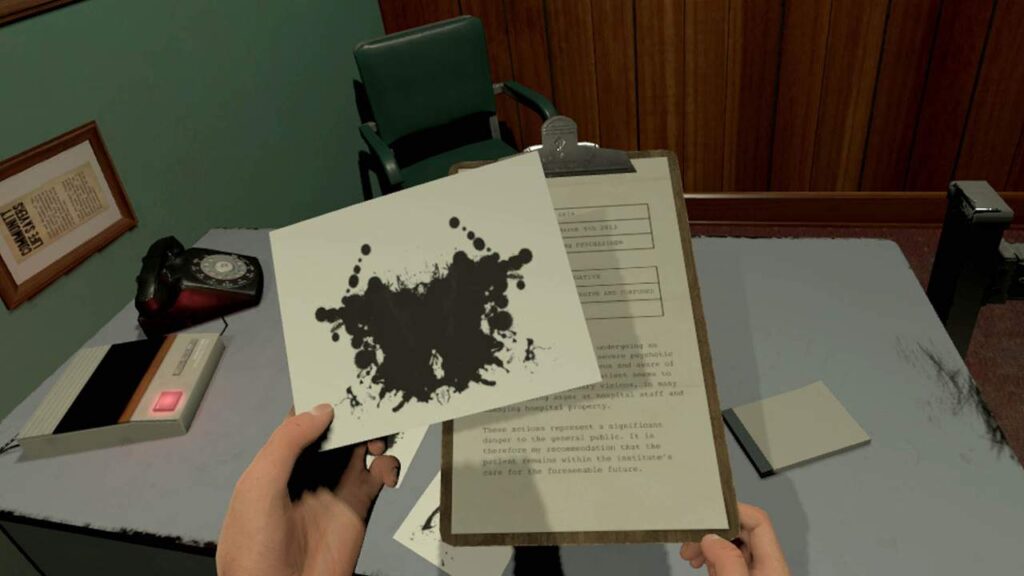

One example is A Chair in a Room: Greenwater, a room-scale VR game: when you physically move around a room, your movements are reflected in the VR world you are in.

What causes the extreme immersion in this game is its ability to dynamically scale the virtual world to fit the actual physical space you move through. You physically move through reality, pick up objects, and interact with the world around you. In the story you start as a patient in some sort of psychiatric ward and then find clues about your past.
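The idea of scaling a virtual world to fit the physical play space can be pictured with a minimal sketch. This is not the game's actual code: the function names `fit_scale` and `to_virtual`, the rectangular room shape, and the uniform scale factor are all assumptions made purely for illustration.

```python
def fit_scale(room_w, room_d, play_w, play_d):
    """Uniform scale factor so a virtual room fits inside the physical play area.

    All sizes are in metres. Hypothetical helper, not taken from any real game.
    """
    if min(room_w, room_d, play_w, play_d) <= 0:
        raise ValueError("all dimensions must be positive")
    # Use the tighter of the two axes so the whole room fits.
    return min(play_w / room_w, play_d / room_d)


def to_virtual(phys_x, phys_y, scale):
    """Map a tracked physical position to virtual-room coordinates."""
    return phys_x / scale, phys_y / scale


# A 4 m x 8 m virtual room squeezed into a 2 m x 2 m play area.
scale = fit_scale(4.0, 8.0, 2.0, 2.0)
virtual_pos = to_virtual(1.0, 0.5, scale)
```

With a scale below 1, a short physical step covers a longer virtual distance, which is one simple way a small living room could stand in for a larger virtual one.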

This game already belongs to the past. It came out in the fall of 2016, and many players immersed themselves in this pseudo-reality for so many hours that they literally could not separate fantasy from reality. Considering that it is a horror game, you can understand the reactions (and the risks).

The importance of rotation and impact

Let's move forward on the path to fused reality. Another round, another game: this time it is VR ping pong. Here too, players reported that they often accepted the digital ball and table as physical reality. They start playing, and after a while they are simply playing ping pong.

A fortunate circumstance helped here: the position sensor in this game created a convincing physical sensation of holding a racket. Many players have achieved real sporting results thanks to "virtual" training.

The brain firmly establishes a link between VR practice and tangible reality.

Making the paradoxical real

One more game: Gorn. It describes itself as a “ridiculously violent” VR gladiator simulator. This says it all, really.

However, in Gorn these ridiculous fights are modeled physically: this means that when you hit someone, the impact is not animated, but calculated using parameters such as speed and impact area. This also leaves room for the player's creativity. Items not made to be weapons can also be used. For example, if an opponent drops his helmet, you can use it to hit him. You can also rip off your opponent's arm and hit them with it.
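To make the difference between an animated hit and a calculated one concrete, here is a minimal sketch of an impact computed from speed and contact area. It is not Gorn's actual formula, which the article does not give: the function name `impact_damage`, the use of kinetic energy per unit area, and the `scale` tuning factor are assumptions chosen for illustration.

```python
def impact_damage(mass_kg, speed_m_s, contact_area_m2, scale=1.0):
    """Illustrative damage score: kinetic energy spread over the contact area.

    Hypothetical model: a faster or heavier object hurts more, and the same
    blow concentrated on a smaller area hurts more still.
    """
    if mass_kg <= 0 or contact_area_m2 <= 0:
        raise ValueError("mass and contact area must be positive")
    kinetic_energy = 0.5 * mass_kg * speed_m_s ** 2  # joules
    return scale * kinetic_energy / contact_area_m2


# Any object works as a weapon, because only its physical parameters matter.
helmet_hit = impact_damage(mass_kg=2.0, speed_m_s=3.0, contact_area_m2=0.5)
sword_tip = impact_damage(mass_kg=2.0, speed_m_s=3.0, contact_area_m2=0.25)
```

Because the result depends only on physical parameters and not on a list of approved weapons, a dropped helmet is as valid an input as a sword, which is what leaves room for the player's creativity.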

An "educational" aspect of this path is that people will also make the new space of merged reality their own by adding their own creative component, or by filling the gaps with their imagination.

Physics of free movement

Another physics-based melee attack game is Blade and Sorcery. Compared to the previous one, this game tries to be realistic and avoids rubbery weapons. In addition, it blocks any physically impossible movement. If you hit a wall with a hammer, it stops and your (virtual) wrist bends.

The advantage here is freedom of movement. You can use physics in creative ways: for example, you can use an ax as an ice ax to climb a mountain. This looks like a consequence the programmers did not originally plan for.

This is a step forward and a step backward at the same time. It is a step forward because it already prefigures a physical reality "homogeneous" with the virtual one. It is a step backward because playing it properly will require much more advanced interfaces: not necessarily Ready Player One's haptic suits, but certainly devices more advanced than those currently under study.

VR resistance

The game where all the previous advances come together is Boneworks, a fully featured, multi-hour physics-based adventure. This game adds a unique feature: push-back modeling. What does that mean? If you push too hard against something that does not move, you are thrown back (in the digital space). In Boneworks, the physics of pushback is applied not only to movement but also to the weight of objects, creating a new level of realism and immersion.
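The push-back idea can be sketched in one dimension. This is only an illustration under stated assumptions, not Boneworks' implementation: the functions `push_back` and `held_object_speed`, the `strength` parameter, and the rule that the whole overshoot is transferred to the body are all hypothetical.

```python
def push_back(body_x, reach, wall_x):
    """Resolve a push against an immovable wall along one axis.

    body_x: virtual body position, reach: how far the real hand extends,
    wall_x: position of the immovable surface. Returns (new_body_x, hand_x).
    """
    hand_target = body_x + reach
    overshoot = hand_target - wall_x
    if overshoot <= 0:
        return body_x, hand_target      # hand never reaches the wall
    # The hand stays on the wall and the body is moved back instead.
    return body_x - overshoot, wall_x


def held_object_speed(hand_speed, mass_kg, strength=10.0):
    """Cap how fast a held object follows the hand, based on its weight."""
    if mass_kg <= 0:
        raise ValueError("mass must be positive")
    return min(hand_speed, strength / mass_kg)


free = push_back(0.0, 0.5, 1.0)        # (0.0, 0.5): no contact
blocked = push_back(0.0, 1.5, 1.0)     # (-0.5, 1.0): body thrown back
heavy = held_object_speed(2.0, 20.0)   # 0.5: heavy object lags the hand
```

In both functions the virtual body stops following the real one exactly, and the virtual world moves in a way the player's body does not feel.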

Here we are in more recent times: 2019. The game generated frenetic activity in the online VR community. People exchanged advice on how to find shortcuts while climbing, how to use heavy objects to block doors, and so on. In short, everyone tried to play in ways the creators did not intend.

New goals bring new problems, however. The problem in this case is motion sickness, and I think it is a consequence of the push-back model. When you quickly lift an object that is too heavy, or interact with others in ways that affect the physics, that constant “pushback” seems to cause nausea.

Boneworks tells us that we will need devices that can handle muscle impulses and neural signals to solve these challenges and move closer to merged reality. High resolution displays, lighter VR headsets and tactile gloves won't eliminate this problem.

Mobile augmented reality

Perhaps the last tangible step towards fused reality will be the explosion of full, complete augmented reality.

Think about what was said earlier about ping pong. With friends physically present, you could digitally "conjure" a table, rackets and balls. They would appear as if from nowhere (perhaps through very light, new-generation headsets). And the physical experience of playing together with real people in the same place would be practically like reality. Experiences like this will evolve to become much more realistic.

The convergence of other technologies such as Microsoft Mesh could merge other games (like Minecraft Earth, for example) with physical reality. For this to work, of course, we need a totally different type of augmented reality from today's: one that somehow incorporates all our senses. It is no coincidence that researchers call it the Internet of the senses, and unsurprisingly it is also the main theme of the Ericsson report on consumer trends for 2030. Of those who want an Internet of the senses, as many as 40% see immersive entertainment as the primary driver of this change. A change that will see an "explosion of reality" take place.

The explosion of reality

Imagine a city center where some buildings are digital and some are physical. Imagine also not knowing, nor worrying about which are physical and which are digital, since you cannot tell them apart anyway.

I personally find it impossible to believe that cities will be like this in just ten years. But I've also made wrong predictions in the past :) What if we suddenly see AR/VR and 5G connect with advances in brain-computer interfaces, with optogenetics, and with digital twins of our cities to create an uncontrolled combined effect? In a decade or so, many things could happen.

Of course, artificial intelligence could also play an important role in the future “reality explosion”. Already today, AI is widely used for graphics processing, and the results are very realistic indeed. Deepfakes are a prime example of this, and it is becoming possible to build them in near real time. Artificial intelligence might even be better at building digital realities than at imitating, reconstructing, or surpassing the human mind.

All things considered, AI could cause an explosion of reality even before an explosion of intelligence.