Picture this future scene: wearing a virtual reality headset and special haptic gloves, a surgeon in Boston operates on a heart patient in Bombay, India. In the operating room, two robotic hands exactly replicate the surgeon's movements and transmit all kinds of sensations back to him: temperature, pressure, weight, resistance, touch.

Research on robotic limbs is advancing rapidly thanks to the use of more and more sensors. This week, during Amazon's tech showcase re:MARS, the company's founder Jeff Bezos showed off the extraordinary technological developments of three distinct companies.

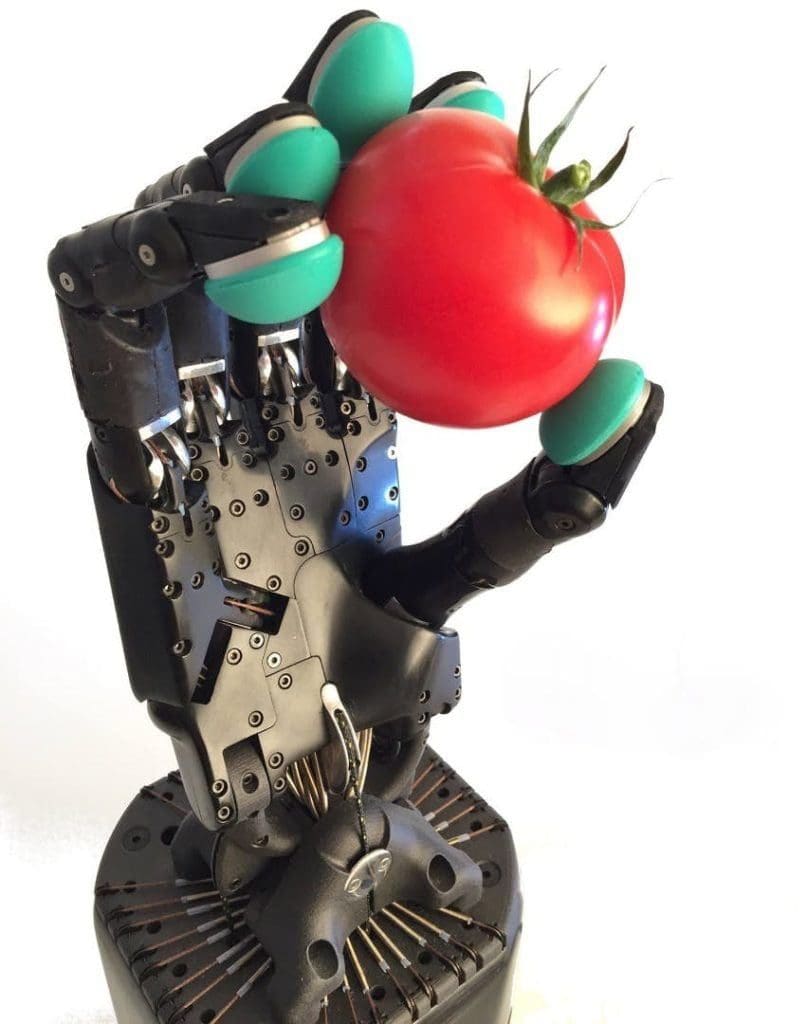

At the center of the system are two robotic hands from the British company Shadow Robot. They are capable of 24 movements and incorporate 129 sensors that transmit position, force and pressure. Each hand can lift 5 kg.

For this week's demonstration, the robotic hands were fitted with next-generation tactile sensors called BioTacs, developed by SynTouch, a company that grew out of the University of Southern California.

Each sensor replicates the functions of a fingertip: it is filled with liquid and covered with a special "textured" skin, much like fingerprints. As the skin moves across a surface, the vibrations produced by friction against these synthetic "fingerprints" are picked up by a hydrophone, which transmits data on the surface touched together with data on its temperature.

The system is controlled remotely through a pair of gloves made by the American company HaptX, which are also equipped with 130 liquid-based tactile sensors.

The first tests are amazing: they provide the physical equivalent of the wonder felt on hearing the first radio broadcast. Beyond this week's demonstration, a first test allowed a user in California to type with the robotic hands on the keyboard of a PC located in London.

Next-generation devices will incorporate the solutions of these three companies and others, and could be used in all kinds of applications: remote rescue, bomb defusal, remote surgery or underwater construction.